As I've posted previously, one of the newest hats I wear is that of national program manager for EPA's Facility Registry System (FRS), where I collect and manage locational data for 2.9 million unique sites and facilities across states, tribes, and territories. I'm certainly excited about being able to contribute some good ideas toward enhancing its capabilities and holdings, and toward collaborating and integrating with others across government.

A large part of this is data aggregated from other sources, such as data collected and maintained by state and tribal partners, EPA program offices and others, and then shared out via such means as EPA's Exchange Network. Historically, FRS has done what it can to improve data quality on the back end, by providing a locational record which aggregates up from the disparate underlying records, with layers of standardization, validation, verification and correction algorithms, as well as working with a national network of data stewards. This has iteratively resulted in vast improvements to the data, correcting common issues such as reversed latitude and longitude values, omitted signs in longitudes, partial or erroneous address fields and so on.
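To make the back-end corrections concrete, here is a minimal sketch of how reversed coordinates and omitted longitude signs might be detected. It is not FRS's actual algorithm; the bounding box (contiguous United States only) and the function names `in_box` and `suggest_fix` are assumptions made for illustration.

```python
from typing import Tuple

# Assumed bounding box for the contiguous United States (illustrative only;
# Alaska, Hawaii and the territories would each need their own box).
LAT_RANGE = (24.0, 50.0)
LON_RANGE = (-125.0, -66.0)


def in_box(lat: float, lon: float) -> bool:
    """True if the point falls inside the assumed bounding box."""
    return (LAT_RANGE[0] <= lat <= LAT_RANGE[1]
            and LON_RANGE[0] <= lon <= LON_RANGE[1])


def suggest_fix(lat: float, lon: float) -> Tuple[float, float, str]:
    """Try a few common repairs and return (lat, lon, note).

    The note is 'ok', a description of the repair that worked,
    or 'unresolved' if no candidate lands inside the box.
    """
    candidates = [
        (lat, lon, "ok"),
        (lat, -abs(lon), "restored negative sign on longitude"),
        (lon, lat, "swapped latitude and longitude"),
        (lon, -abs(lat), "swapped and restored negative sign"),
    ]
    for cand_lat, cand_lon, note in candidates:
        if in_box(cand_lat, cand_lon):
            return cand_lat, cand_lon, note
    return lat, lon, "unresolved"
```

For example, `suggest_fix(38.89, 77.03)` returns the same latitude with longitude `-77.03` and a note that the sign was restored. A record that comes back `"unresolved"` would be a candidate for review by a data steward rather than automatic correction.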

However, some issues with the data remain. The weakness lies in how data is collected, which limits what kinds of backend correction can be performed. In most cases, data is captured via basic text fields. The further upstream the data can be vetted and validated, the better, ideally right at the point of capture, for example where facility operators themselves enter the data.

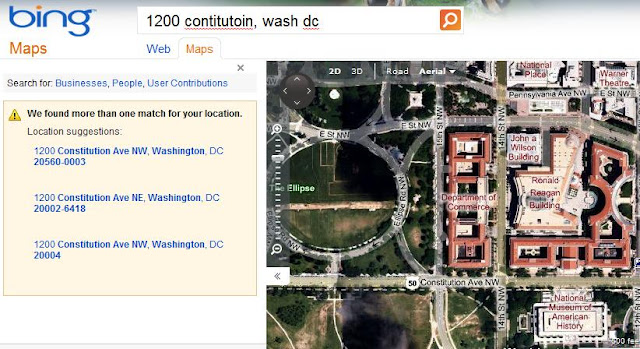

So, here is the notion: a toolbox of plug-and-play web services and reusable code to replace the basic free-text field, allowing real-time parsing and verification of data as it is entered. Part of that may involve using licensed commercial APIs to help with address verification and disambiguation, for example the Bing Maps capability to deal with an incomplete address or one with a typo. Given an entry such as "1200 Contitutoin, Wash DC", the web services would try to match it and return "Did you mean 1200 Constitution Avenue NW, Washington, DC?"
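As a rough illustration of the "Did you mean" behavior, the sketch below uses Python's standard `difflib` module to fuzzy-match an entry against a tiny reference list. This is a stand-in only: it does not call Bing Maps or any other commercial API, and the `KNOWN_ADDRESSES` list and the function names are hypothetical. A real service would query a geocoder or an authoritative address database.

```python
import difflib
import re
from typing import Optional

# Hypothetical reference list, standing in for a geocoder or address database.
KNOWN_ADDRESSES = [
    "1200 Constitution Avenue NW, Washington, DC",
    "1200 Pennsylvania Avenue NW, Washington, DC",
    "1600 Pennsylvania Avenue NW, Washington, DC",
]


def normalize(text: str) -> str:
    """Lowercase, replace punctuation with spaces, and collapse whitespace."""
    cleaned = re.sub(r"[^a-z0-9 ]", " ", text.lower())
    return " ".join(cleaned.split())


def did_you_mean(entry: str, cutoff: float = 0.5) -> Optional[str]:
    """Return the closest known address, or None if nothing is similar enough."""
    lookup = {normalize(addr): addr for addr in KNOWN_ADDRESSES}
    matches = difflib.get_close_matches(
        normalize(entry), list(lookup), n=1, cutoff=cutoff
    )
    return lookup[matches[0]] if matches else None
```

Calling `did_you_mean("1200 Contitutoin, Wash DC")` picks the Constitution Avenue entry from the list above. The `cutoff` value is a tuning assumption: set too low, the form suggests nonsense; set too high, it misses ordinary typos.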

Between suggesting an alternative that attempts to correct a partial or incorrect address, and providing an aerial photo as a visual cue, this improves the likelihood that the user will double-check their entry and either accept the suggested alternative or type in a corrected address. Notionally, if the aerial photo shows a big open field where there should be a large plastics plant, they would stop and wonder, and perhaps double-check the address they had entered.

That's certainly a good first step, and it is something I'm looking to promote in the short term. Some of the EPA stakeholders I have talked to are very interested in this, and I will look at developing some easy-to-integrate code for them to use.

But to think more long-range, let's take that further. In the large universe of facilities that I deal with, not everything populating an "address" field is a conventional street address. For example, remote mining activities in western states might instead be located using the Public Land Survey System (PLSS), such as "Sec 17, Twp 6W Range 11N", and rural areas might simply use "Mile 7.5 on FM 1325 S of Anytown" or "PR 155 13.5km west of Anytown".

Again, perhaps there are ways to improve this. It is a longer-term discussion, but the ingredients certainly exist. A first step might be to develop guidance on consistent ways for folks to enter this type of data, for example "Sec 17, Twp 6W Range 11N" versus S17-T6W-R11N. Alongside that, one would develop parsers that can understand and standardize the possible permutations that might be entered, including quarter-section and meridian info. For example, NW1/4 SW1/4 SE1/4 SEC 22 T2S R3E MDM drills down through quarter sections to identify a 10-acre parcel and also references the Mount Diablo Meridian.
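A parser along these lines could start out quite small. The following is a minimal sketch, assuming only the handful of spellings shown above. The class and function names, the accepted abbreviations, and the one-entry meridian table are all assumptions for illustration, and direction letters are captured as entered without validation.

```python
import re
from dataclasses import dataclass
from typing import Optional, Tuple

# Assumed lookup; only the meridian code mentioned in the text is included.
MERIDIANS = {"MDM": "Mount Diablo Meridian"}

QUARTER = re.compile(r"\b(NE|NW|SE|SW)\s*1/4")
SECTION = re.compile(r"\bS(?:EC(?:TION)?)?\.?\s*(\d{1,2})\b")
TOWNSHIP = re.compile(r"\bT(?:OWNSHIP|WP)?\.?\s*(\d{1,3})\s*([NSEW])\b")
RANGE = re.compile(r"\bR(?:ANGE|NG|GE)?\.?\s*(\d{1,3})\s*([NSEW])\b")
MERIDIAN = re.compile(r"\b(" + "|".join(MERIDIANS) + r")\b")


@dataclass
class PlssDescription:
    section: int
    township: str                # e.g. "2S"
    range_: str                  # e.g. "3E"
    quarters: Tuple[str, ...]    # aliquot parts in the order written
    meridian: Optional[str]      # meridian code, if one was recognized

    @property
    def approx_acres(self) -> float:
        # A nominal section is 640 acres; each quarter call divides by four.
        return 640 / (4 ** len(self.quarters))

    def canonical(self) -> str:
        parts = []
        if self.quarters:
            parts.append(" ".join(self.quarters))
        parts.append(f"S{self.section}-T{self.township}-R{self.range_}")
        if self.meridian:
            parts.append(self.meridian)
        return " ".join(parts)


def parse_plss(text: str) -> Optional[PlssDescription]:
    """Parse a free-text PLSS entry; return None if a required part is missing."""
    upper = text.upper()
    sec, twp, rng = SECTION.search(upper), TOWNSHIP.search(upper), RANGE.search(upper)
    if not (sec and twp and rng):
        return None
    mer = MERIDIAN.search(upper)
    return PlssDescription(
        section=int(sec.group(1)),
        township=twp.group(1) + twp.group(2),
        range_=rng.group(1) + rng.group(2),
        quarters=tuple(QUARTER.findall(upper)),
        meridian=mer.group(1) if mer else None,
    )
```

With this sketch, "Sec 17, Twp 6W Range 11N" and "S17-T6W-R11N" both reduce to the same canonical string, and the quarter-section example reports a nominal 10 acres. Actual sections are often irregular, so the acreage is only a rough indicator of precision.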

Currently, there isn't any truly standardized way of entering and managing these, but perhaps there is a role for the surveying community in developing standardized nomenclature to assist in database searching and indexing. Coincident with this is the potential for collaborative development of ways to parse and interpret nonstandardized entries. These could leverage existing PLSS data and geocoders to translate entries into locational extents, such as a bounding box, provisioned with appropriate record-level metadata describing elements such as method of derivation and accuracy estimate.

In concert with this, obviously, should be an effort toward providing linkages to actual field survey polygonal data where such data is available and where the effort is parcel-oriented (for example, Superfund site cleanup and brownfields).

Similarly, one could collaboratively develop guidance and parsers for the route-oriented entries, for example "Mile 7.5 on FM 1325 S of Anytown" or "PR 155 13.5km west of Anytown", toward standardizing these types of fields as well. The guidance would cover questions such as whether to expand FM as "Farm to Market Route" and in what order to place the elements.
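As a starting point for discussion, a parser for the two route-style examples above might look like the sketch below. The patterns cover only those two phrasings, and the field names and the choice to normalize distances to kilometers are assumptions. Route prefixes such as FM are kept as entered, since whether to expand them is exactly the kind of question the guidance would need to settle.

```python
import re
from dataclasses import dataclass
from typing import Optional

KM_PER_MILE = 1.609344
DIRECTION = r"(?P<dir>NORTH|SOUTH|EAST|WEST|N|S|E|W)"
ROUTE = r"(?P<route>[A-Z]+\s*\d+)"
NUMBER = r"(?P<dist>\d+(?:\.\d+)?)"

# "Mile 7.5 on FM 1325 S of Anytown"
MILEPOST = re.compile(
    rf"MILE\s+{NUMBER}\s+ON\s+{ROUTE}\s+{DIRECTION}\s+OF\s+(?P<place>.+)",
    re.IGNORECASE,
)
# "PR 155 13.5km west of Anytown"
ROUTE_FIRST = re.compile(
    rf"{ROUTE}\s+{NUMBER}\s*(?P<unit>MILES?|MI|KM)\s+{DIRECTION}\s+OF\s+(?P<place>.+)",
    re.IGNORECASE,
)


@dataclass
class RouteLocation:
    route: str          # e.g. "FM 1325", kept as entered apart from spacing and case
    distance_km: float  # distance along the route, normalized to kilometers
    direction: str      # single letter: N, S, E or W
    place: str          # reference place the distance is measured from


def parse_route_location(text: str) -> Optional[RouteLocation]:
    """Parse a route-style location; return None if neither pattern fits."""
    entry = text.strip()
    match = MILEPOST.fullmatch(entry)
    in_miles = True
    if match is None:
        match = ROUTE_FIRST.fullmatch(entry)
        if match is None:
            return None
        in_miles = match.group("unit").upper() != "KM"
    distance = float(match.group("dist"))
    return RouteLocation(
        route=" ".join(match.group("route").upper().split()),
        distance_km=distance * KM_PER_MILE if in_miles else distance,
        direction=match.group("dir")[0].upper(),
        place=match.group("place").strip(),
    )
```

Anything that fails both patterns returns `None`, which in a data-entry form could trigger a prompt asking the user to rephrase or to pick the location on a map instead.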

And then, one would want to leverage routing software to measure the distance along the given route from the given point of interest (POI), providing a roughly geocoded locational value to get in the ballpark. And again, one would want a web service that does this to return any appropriate metadata on source, error and so on.
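The measuring step can be sketched without any routing package, assuming the route geometry is already available as a list of vertices that starts at the reference point and runs in the stated direction. The code below walks the polyline using great-circle (haversine) segment lengths and interpolates linearly within the final segment. The names `locate_along` and `RoughLocation` are illustrative, and obtaining and orienting the route geometry is the part real routing software would handle.

```python
import math
from dataclasses import dataclass
from typing import List, Optional, Tuple

EARTH_RADIUS_KM = 6371.0088  # mean Earth radius
Point = Tuple[float, float]  # (latitude, longitude) in decimal degrees


def haversine_km(a: Point, b: Point) -> float:
    """Great-circle distance between two points, in kilometers."""
    lat1, lon1, lat2, lon2 = map(math.radians, (a[0], a[1], b[0], b[1]))
    h = (math.sin((lat2 - lat1) / 2) ** 2
         + math.cos(lat1) * math.cos(lat2) * math.sin((lon2 - lon1) / 2) ** 2)
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(h))


@dataclass
class RoughLocation:
    lat: float
    lon: float
    method: str  # record-level metadata: how the value was derived


def locate_along(route: List[Point], distance_km: float) -> Optional[RoughLocation]:
    """Return the point distance_km along the route, or None if out of range."""
    if distance_km < 0:
        return None
    remaining = distance_km
    for start, end in zip(route, route[1:]):
        segment = haversine_km(start, end)
        if segment == 0:
            continue  # skip duplicate vertices
        if remaining <= segment:
            fraction = remaining / segment
            return RoughLocation(
                lat=start[0] + fraction * (end[0] - start[0]),
                lon=start[1] + fraction * (end[1] - start[1]),
                method="linear interpolation along route polyline",
            )
        remaining -= segment
    return None  # requested distance runs past the end of the route
```

The `method` field is a placeholder for the source and error metadata mentioned above. A production service would also want to report the route data source and an accuracy estimate.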

PLSS locations, mileage-along-a-route locations, and things like this are just a sampling of the universe of possibilities. And as I point out, there are bits and pieces of tools that can do some of these things, but they are currently scattered and uncoordinated, and community-oriented, collaborative efforts can help to pull some of these together.

Atop these, as mentioned above, one could also provide additional pieces. At the most basic level these would be tools for visual verification. If collection mandates permit, they could include tools that allow the user to drop a pushpin on an aerial photo feature, drag a bounding box, or digitize a rough boundary. Ultimately, of course, there would be a means of entering or uploading survey data for field-located monumentation points, boundary topology, and record description data.

From a federal perspective, EPA is certainly not the only agency that needs these types of tools, nor the only one that needs internal policy or best-practices guidance on how these types of values are best represented in databases. It would make sense, from an Enterprise Architecture perspective, for the federal community to collaborate, along with state, tribal and local governments. Similarly, I would think that many non-profits, academic institutions and private sector entities have a big stake in locational data improvement. They could benefit both from the improved data that such tools would facilitate and from using the tools for their own data collection.

For my part, I will try to do what I can toward leading the charge on these, and to leverage any existing efforts already out there. Additionally, given the capabilities that FRS has, I am looking to continue to integrate across internal stakeholders as well as external agencies toward being able to aggregate, link and reshare, with a process where data is iteratively improved and upgraded collectively.

I'd certainly be interested in getting thoughts, ideas and perspectives from others on this.
